Introduction

Fine-tuning every weight of a large pre-trained model is expensive in compute, memory, and storage. Parameter-efficient Fine-tuning (PEFT) addresses this by adapting a model to a new task while training only a small fraction of its parameters, usually with little loss in task performance. Let’s look at what PEFT entails and why it’s gaining traction in the field.

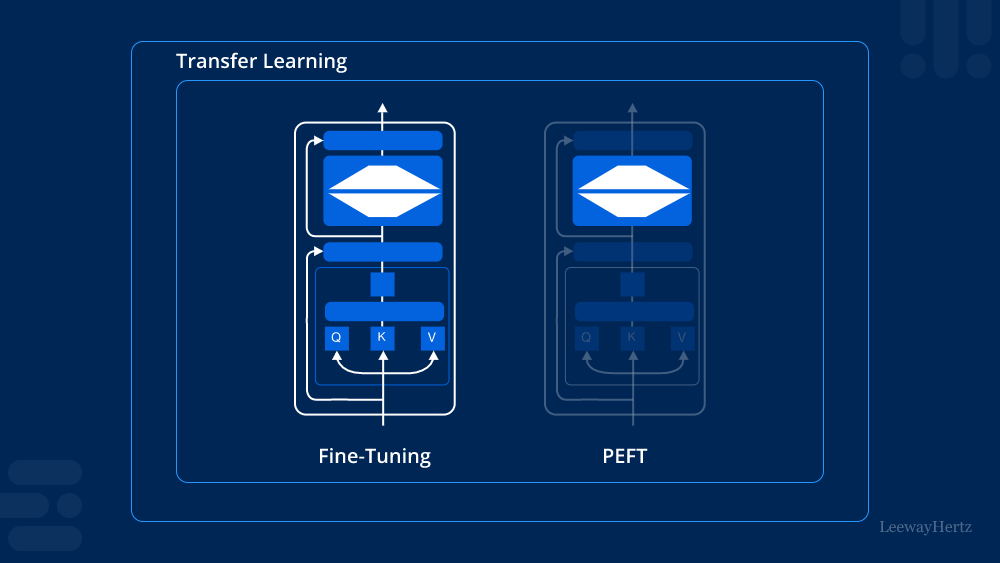

Understanding Parameter-efficient Fine-tuning (PEFT)

Parameter-efficient Fine-tuning (PEFT) is a family of methods for adapting a pre-trained machine learning model to a specific task by training only a small number of parameters, either a small subset of the existing weights or a few newly added ones, while the rest of the model stays frozen. Its core objective is to improve performance on the target task while keeping computational cost and memory footprint manageable.

The Importance of Efficiency in Machine Learning

Efficiency in machine learning has several dimensions. Computational efficiency is about how quickly, and with how little hardware, a model can be trained and run. Parameter efficiency is about how many trainable parameters a model needs to reach a given level of performance on a task: the fewer that must be updated and stored per task, the cheaper each new adaptation becomes.
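To make parameter efficiency concrete, here is a minimal sketch that computes what share of a model's parameters is actually trained. The layer names and parameter counts are hypothetical, chosen only for illustration, and `trainable_fraction` is a helper invented for this example rather than part of any library.

```python
def trainable_fraction(layer_sizes, trainable_layers):
    """Return (trainable, total, fraction) given per-layer parameter counts."""
    total = sum(layer_sizes.values())
    trainable = sum(layer_sizes[name] for name in trainable_layers)
    return trainable, total, trainable / total

# Hypothetical model: these counts are illustrative, not measured.
layers = {
    "embeddings": 24_000_000,
    "encoder": 85_000_000,
    "classifier_head": 1_538,
}

# Assume only the small task head is trained; everything else stays frozen.
trainable, total, frac = trainable_fraction(layers, ["classifier_head"])
print(f"{trainable:,} of {total:,} parameters are trainable ({frac:.4%})")
```

The same helper can be pointed at any split of frozen and trainable layers to compare adaptation strategies before running them.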

How PEFT Works

PEFT typically involves several key steps:

- Transfer Learning Foundation: Utilizing a pre-trained model as a starting point, which has already learned from vast datasets, saves time and computational resources.

- Task-specific Fine-tuning: Fine-tuning adjusts the pre-trained model’s parameters to fit the nuances of the new task. PEFT updates only a small set of parameters and leaves the rest at their pre-trained values, rather than changing every weight, which is what keeps it efficient.

- Regularization Techniques: Implementing regularization methods like weight decay or dropout helps prevent overfitting during fine-tuning, ensuring the model generalizes well to new data.
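The three steps above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not a production recipe: the "pre-trained" backbone is a made-up fixed matrix, the task data is synthetic, and the names `BACKBONE`, `features`, `fine_tune`, and `make_toy_task` are hypothetical. Only the small head is trained, with weight decay as the regularizer.

```python
import random

# Stand-in for a pre-trained backbone: a fixed 2 -> 3 linear map followed by ReLU.
# These weights are made up for illustration and are never updated below.
BACKBONE = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]

def features(x):
    """Run the frozen backbone on a 2-element input."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE]

def predict(head, x):
    """Apply the small trainable linear head to the backbone features."""
    return sum(w * f for w, f in zip(head["w"], features(x))) + head["b"]

def mse(head, data):
    """Mean squared error of the head's predictions over a dataset."""
    return sum((predict(head, x) - y) ** 2 for x, y in data) / len(data)

def fine_tune(head, data, lr=0.05, weight_decay=1e-3, epochs=200):
    """Plain SGD on the head only, with L2 weight decay on its weights."""
    for _ in range(epochs):
        for x, y in data:
            f = features(x)
            err = predict(head, x) - y
            # Gradient of 0.5 * err**2 w.r.t. each head weight, plus the decay term.
            head["w"] = [w - lr * (err * fi + weight_decay * w)
                         for w, fi in zip(head["w"], f)]
            head["b"] -= lr * err
    return head

def make_toy_task(n=50, seed=0):
    """Synthetic regression task whose targets depend on the backbone features."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        f = features(x)
        data.append((x, 2.0 * f[1] - f[0] + 0.5))
    return data

data = make_toy_task()
backbone_before = [row[:] for row in BACKBONE]
head = {"w": [0.0, 0.0, 0.0], "b": 0.0}  # the only trainable parameters (4 numbers)

loss_before = mse(head, data)
fine_tune(head, data)
loss_after = mse(head, data)

print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
print("backbone unchanged:", BACKBONE == backbone_before)
```

In a real project the backbone would be an actual pre-trained network and the optimizer would come from a deep learning framework, but the division of labor is the same: frozen features, a small trainable part, and a regularizer on what is trained.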

Advantages of PEFT

- Reduced Computational Costs: By reusing a pre-trained model and training only a small number of parameters, PEFT lowers the computational and memory burden compared with training a model from scratch or fine-tuning all of its weights.

- Faster Deployment: Fine-tuning a pre-trained model is quicker than training a new one, making PEFT ideal for applications requiring rapid deployment.

- Improved Performance: Despite training few parameters, PEFT often improves performance on a specific task over the unadapted pre-trained model, because it builds on the features that model has already learned.

Applications of PEFT

PEFT finds applications across various domains:

- Natural Language Processing (NLP): Fine-tuning transformer models like BERT for sentiment analysis or named entity recognition tasks.

- Computer Vision: Enhancing object detection or image classification models by fine-tuning pre-trained convolutional neural networks (CNNs).

- Recommendation Systems: Optimizing collaborative filtering models with PEFT to personalize recommendations based on user behavior.

Challenges and Considerations

While PEFT offers compelling advantages, it’s not without challenges:

- Task-specific Adaptation: Ensuring the pre-trained model adapts effectively to the nuances of the new task requires careful fine-tuning.

- Domain Shifts: Models fine-tuned on specific datasets may struggle with performance if applied to significantly different data distributions.

- Ethical Considerations: As with any machine learning technique, ethical considerations regarding bias, fairness, and privacy must be addressed during model development and deployment.

Future Directions

The future of PEFT lies in:

- Advanced Regularization Techniques: Further refining regularization methods to enhance model generalization.

- Hybrid Approaches: Exploring hybrid models that combine aspects of both PEFT and other efficient learning paradigms like distillation.

- Automated Fine-tuning Strategies: Developing automated tools to streamline the PEFT process, making it more accessible to developers and researchers.

Conclusion

Parameter-efficient Fine-tuning (PEFT) offers a practical balance between computational cost and task performance. As the demand for rapid, scalable AI solutions grows, knowing how to apply PEFT will be valuable for building robust applications across diverse domains. By reusing what a pre-trained model already knows and updating only a small set of parameters, PEFT makes adapting large models cheaper and more sustainable.
