Introduction

As artificial intelligence (AI) moves into sectors from healthcare to finance, securing the models behind these systems becomes essential. This article covers the fundamentals of AI model security: why it matters, the common threats, and strategies for safeguarding AI models.

Understanding AI Model Security

AI model security refers to the practices and measures put in place to protect AI models from threats that could compromise their functionality, integrity, or confidentiality. This includes safeguarding against attacks that could manipulate, corrupt, or otherwise undermine the model’s performance. As AI models become more prevalent, the need for robust AI model security strategies grows.

The Importance of AI Model Security

AI models are increasingly used in sensitive and critical applications. For instance, in healthcare, AI systems help in diagnosing diseases and recommending treatments. In finance, they are used for fraud detection and algorithmic trading. The impact of a compromised AI model in these scenarios could be disastrous, leading to incorrect diagnoses or financial losses. Hence, implementing AI model security measures is essential to protect against such risks and ensure the reliability and trustworthiness of AI systems.

Common Threats to AI Models

Several types of threats can jeopardize AI model security. Understanding these threats helps in developing effective security strategies.

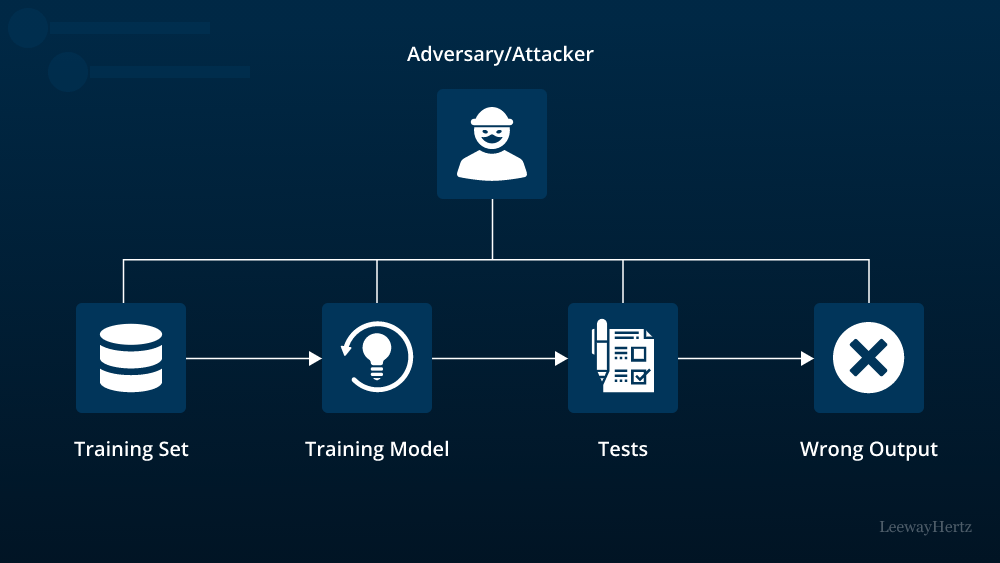

1. Adversarial Attacks

Adversarial attacks manipulate input data to deceive an AI model into making incorrect predictions. For example, alterations to an image that are too slight for a person to notice can lead a model to misclassify it. These attacks undermine the reliability of AI models, particularly in safety-critical applications like autonomous driving.
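To make the idea concrete, here is a minimal sketch of one well-known attack of this kind, the fast gradient sign method (FGSM), applied to a tiny logistic-regression model. The weights, input, and `eps` value are hypothetical and chosen only for illustration; real attacks target far larger models, but the mechanics are the same.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss."""
    p = predict_proba(w, b, x)
    # Gradient of the cross-entropy loss with respect to the input x.
    grad = [(p - y) * wi for wi in w]
    sign = [(g > 0) - (g < 0) for g in grad]
    return [xi + eps * s for xi, s in zip(x, sign)]

# Hypothetical weights and input, chosen only for illustration.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.3, 0.2, 0.1], 1

x_adv = fgsm(w, b, x, y, eps=0.2)
clean_label = int(predict_proba(w, b, x) >= 0.5)
adv_label = int(predict_proba(w, b, x_adv) >= 0.5)
print(clean_label, adv_label)  # prints: 1 0
```

Each feature moves by at most 0.2, yet the predicted class flips from 1 to 0.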

2. Model Theft

Model theft occurs when malicious actors obtain an AI model without authorization, whether by copying its files or by querying it repeatedly and training a replica on its responses. The stolen model can then be used for unauthorized purposes or to replicate the original’s functionality, which puts both proprietary technology and competitive advantage at risk.

3. Data Poisoning

In data poisoning attacks, attackers introduce malicious data into the training set so that the model learns the wrong patterns. This degrades the model’s performance, leading to incorrect outputs and reducing the overall effectiveness of the AI system.
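The effect is easy to see on a toy model. The sketch below uses a hypothetical one-dimensional nearest-centroid classifier and made-up data points; an attacker who can slip three mislabeled samples into the training set is enough to change the prediction for a borderline input.

```python
def train_centroids(samples):
    """Nearest-centroid 'training': average the feature value per label."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(centroids, value):
    """Return the label whose centroid is closest to value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Made-up training data: (feature value, label).
clean = [(0.0, 0), (1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1), (10.0, 1)]
# Poisoned samples: values typical of class 1, deliberately labeled 0.
poison = [(9.0, 0), (10.0, 0), (11.0, 0)]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

print(predict(clean_model, 6.0), predict(poisoned_model, 6.0))  # prints: 1 0
```

The poisoned samples drag the class 0 centroid from 1.0 to 5.5, so the input 6.0 is now assigned to the wrong class.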

4. Inference Attacks

Inference attacks extract sensitive information from an AI model’s outputs. For instance, by querying the model and studying its responses, attackers might infer confidential data about individuals, such as whether a particular person’s record was part of the training set. This can lead to privacy breaches and exposure of sensitive information.
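One common form is membership inference, where the attacker guesses whether a given record was in the training set. The sketch below is a deliberately simplified illustration: `MemorizingModel` is a hypothetical stand-in for an overfit model that is most confident on points it has seen, and the 0.9 threshold is an arbitrary assumption. Attacks on real models are more elaborate, but they exploit the same gap in confidence.

```python
import math

class MemorizingModel:
    """Toy stand-in for an overfit model: confidence peaks on training points."""

    def __init__(self, training_points):
        self._points = list(training_points)

    def confidence(self, x):
        # Confidence decays with distance to the nearest training point.
        nearest = min(abs(x - p) for p in self._points)
        return math.exp(-nearest)

def guess_membership(model, x, threshold=0.9):
    """Attacker's rule: very high confidence suggests x was in the training set."""
    return model.confidence(x) >= threshold

model = MemorizingModel([1.0, 4.0, 7.5])
print(guess_membership(model, 4.0), guess_membership(model, 5.5))  # prints: True False
```

The attacker never sees the training data, only the confidence scores the model returns.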

Strategies for Enhancing AI Model Security

To protect against these threats, implementing effective AI model security strategies is crucial. Here are some key measures:

1. Robust Training and Testing

Ensuring the robustness of AI models through rigorous training and testing can help mitigate adversarial attacks and data poisoning. Techniques such as adversarial training, where the model is exposed to adversarial examples during training, can improve its resilience to these threats.

2. Model Encryption

Encrypting AI models helps protect them from theft and unauthorized access. Model encryption stores and transmits the model’s parameters in encrypted form, so that only entities holding the decryption key can read them. This is particularly important for protecting proprietary algorithms and sensitive intellectual property.

3. Regular Audits and Monitoring

Regularly auditing and monitoring AI models helps detect and address potential security issues. This includes monitoring for unusual patterns, such as a sudden spike in queries from a single client, that might indicate an attack or vulnerability. Continuous vigilance helps keep security measures up to date and effective against emerging threats.
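As one possible illustration of monitoring, the sketch below flags clients that send an unusually high number of queries in a short time, a pattern that may accompany attempts to copy or probe a model. The `QueryMonitor` class, its thresholds, and the client name are all hypothetical; a production system would likely combine several signals rather than rely on query volume alone.

```python
from collections import defaultdict, deque

class QueryMonitor:
    """Flags clients that exceed max_queries within a sliding time window."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)

    def record(self, client_id, timestamp):
        """Record one query; return True if the client now looks suspicious."""
        times = self._history[client_id]
        times.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while times and times[0] <= timestamp - self.window_seconds:
            times.popleft()
        return len(times) > self.max_queries

monitor = QueryMonitor(max_queries=3, window_seconds=10.0)
flags = [monitor.record("client-a", t) for t in (0.0, 1.0, 2.0, 3.0, 20.0)]
print(flags)  # prints: [False, False, False, True, False]
```

The fourth query within ten seconds trips the flag; by the time the fifth arrives, the earlier ones have aged out of the window.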

4. Data Privacy Measures

Implementing data privacy measures is essential for protecting against inference attacks. Techniques such as differential privacy, which adds carefully calibrated noise to query results or to the training process, can help safeguard sensitive information while still allowing the AI model to function effectively.
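A minimal sketch of the idea, assuming the classic Laplace mechanism applied to a counting query: because adding or removing one person changes a count by at most 1, noise drawn from a Laplace distribution with scale 1/epsilon masks any individual's contribution. The `ages` data, the function names, and the `epsilon` value are illustrative assumptions, not a recommended configuration.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Counting query with Laplace noise; a count has sensitivity 1."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Seeded only to make the sketch reproducible; do not seed in real use.
rng = random.Random(0)
ages = [23, 35, 41, 52, 67, 29, 38]  # made-up records
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(round(noisy, 2))  # a noisy value near the true count of 3
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of less accurate answers.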

5. Access Controls and Authentication

Enforcing strict access controls and authentication protocols ensures that only authorized personnel can interact with the AI model. This reduces the risk of unauthorized modifications or access to sensitive model information.
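The sketch below shows one way such a check might look: a signed token binds a user to a role, and each request is allowed only if the signature is valid and the role permits the action. The role names, the `PERMISSIONS` table, and the token format are hypothetical, and the hard-coded secret is for demonstration only; a real deployment would typically use an established identity provider and a managed secret store.

```python
import hashlib
import hmac

# Hypothetical role-to-permission table for a model-serving API.
PERMISSIONS = {
    "analyst": {"predict"},
    "ml-engineer": {"predict", "update_weights"},
}

def sign(user, role, secret):
    """Issue a token binding a user to a role with an HMAC-SHA256 tag."""
    payload = f"{user}:{role}"
    tag = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}:{tag}"

def is_allowed(token, action, secret):
    """Check the token's signature, then the role's permissions."""
    try:
        user, role, tag = token.split(":")
    except ValueError:
        return False  # malformed token
    expected = hmac.new(secret, f"{user}:{role}".encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(tag, expected):
        return False
    return action in PERMISSIONS.get(role, set())

secret = b"demo-secret-do-not-reuse"
token = sign("alice", "analyst", secret)
print(is_allowed(token, "predict", secret))          # True
print(is_allowed(token, "update_weights", secret))   # False
forged = token.replace("analyst", "ml-engineer")
print(is_allowed(forged, "update_weights", secret))  # False
```

Editing the role inside the token invalidates the signature, so an analyst cannot promote themselves to a role that may modify the model.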

Conclusion

As AI systems become integral to more industries, AI model security must be a top priority. By understanding the common threats and implementing robust security measures, organizations can safeguard sensitive information and proprietary technology while maintaining the reliability and trustworthiness of their AI systems. As the field evolves, staying informed about emerging threats and best practices will remain crucial for a secure and effective AI infrastructure.
