Large Language Models (LLMs) have changed how we interact with artificial intelligence, enabling more natural and dynamic communication. One of the most practically useful advances in this field is the ability of LLMs to generate structured outputs. This capability makes the models more useful for tasks that require precise, organized information. In this article, we explore what structured outputs are, what benefits they bring, and how models can be optimized to produce them.

What Are Structured Outputs in LLMs?

Structured outputs in LLMs refer to the ability of these models to produce data in a predefined format, such as tables, lists, or JSON objects, rather than plain, unstructured text. This capability is crucial for tasks where specific formatting and organization of information are required, such as data extraction, report generation, and response formatting for applications like chatbots and virtual assistants.
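As a minimal sketch of what this looks like in practice, the Python snippet below parses a JSON reply into a dictionary whose fields can be read by name. The reply string and the `parse_reply` helper are hypothetical and made up for illustration; a real reply would come from whichever LLM API you use.

```python
import json

# Hypothetical model reply; in a real system this string comes from the LLM.
structured_reply = '{"customer": "Ada Lovelace", "order_id": "A-1042", "status": "shipped"}'


def parse_reply(reply: str) -> dict:
    """Parse a JSON reply and make sure the top level is an object."""
    data = json.loads(reply)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    return data


order = parse_reply(structured_reply)
print(order["status"])  # prints: shipped
```

With plain text, the same status would have to be located with string searches or regular expressions, which tends to break when the wording changes.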

How Structured Outputs Enhance LLM Capabilities

- Precision and Accuracy: Structured outputs make results easier to validate and interpret, because each piece of information sits in a known field rather than somewhere in free text. For instance, when extracting information from a large text corpus, the model can present the results in an organized form, which makes it simpler to find the relevant details and to spot missing or malformed values.

- Task-Specific Customization: By generating structured outputs, LLMs can be tailored to specific tasks. For example, in customer service, a model can be designed to produce structured responses that fit into predefined categories such as “Order Status,” “Technical Support,” or “Billing Inquiries.” This makes responses easier to route and handle, and it improves the user experience by providing clear and relevant information.

- Integration with Other Systems: Structured outputs in LLMs facilitate seamless integration with other software systems and APIs. Since the outputs are formatted in a standardized way, they can be easily consumed by downstream applications, enabling automated workflows and real-time data processing. This is particularly useful in industries like finance, healthcare, and e-commerce, where data interoperability is crucial.
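The validation and integration points above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `SupportReply` shape, the `validate_reply` and `route` helpers, and the queue names are all hypothetical, and the categories are the ones from the customer service example.

```python
import json
from dataclasses import dataclass

# Categories taken from the customer service example above.
ALLOWED_CATEGORIES = {"Order Status", "Technical Support", "Billing Inquiries"}


@dataclass
class SupportReply:
    category: str
    message: str


def validate_reply(raw: str) -> SupportReply:
    """Parse a model reply and reject anything that does not fit the expected shape."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    category = data.get("category")
    message = data.get("message")
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    if not isinstance(message, str) or not message:
        raise ValueError("message must be a non-empty string")
    return SupportReply(category=category, message=message)


def route(reply: SupportReply) -> str:
    """Pick a downstream queue for a validated reply (queue names are made up)."""
    queues = {
        "Order Status": "orders-queue",
        "Technical Support": "support-queue",
        "Billing Inquiries": "billing-queue",
    }
    return queues[reply.category]


reply = validate_reply(
    '{"category": "Billing Inquiries", "message": "Your invoice was sent on Monday."}'
)
print(route(reply))  # prints: billing-queue
```

Because every failure surfaces as a `ValueError`, a caller can catch one exception type and decide whether to retry the model or fall back to a human agent.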

Applications of Structured Outputs in LLMs

The ability to produce structured outputs significantly broadens the application scope of LLMs. Here are some key areas where this feature is making a substantial impact:

1. Data Extraction and Analysis

Structured outputs in LLMs are extensively used in data extraction tasks, such as pulling specific details from large documents, databases, or unstructured text sources. For example, a legal professional might use an LLM to extract clauses from contracts and present them in a tabular format for easy comparison. Similarly, researchers can use structured outputs to compile data from multiple studies into a unified dataset, facilitating deeper analysis.
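To make the contract example concrete, here is a hedged sketch that turns a JSON array of extracted clauses into a CSV table using only the Python standard library. The field names, the sample reply, and the `clauses_to_csv` helper are assumptions chosen for illustration, not a fixed format.

```python
import csv
import io
import json

# Column order for the comparison table; these field names are illustrative.
FIELDS = ["contract", "clause", "notice_days"]

# Hypothetical model reply: one JSON object per extracted clause.
raw_reply = """[
  {"contract": "Vendor A", "clause": "Termination", "notice_days": 30},
  {"contract": "Vendor B", "clause": "Termination", "notice_days": 60}
]"""


def clauses_to_csv(raw: str) -> str:
    """Convert a JSON array of clause records into CSV text."""
    rows = json.loads(raw)
    if not isinstance(rows, list):
        raise ValueError("expected a JSON array of records")
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        # Missing fields become empty cells; unexpected fields are dropped.
        writer.writerow({field: row.get(field, "") for field in FIELDS})
    return buffer.getvalue()


print(clauses_to_csv(raw_reply))
```

The resulting text can be opened in a spreadsheet or loaded into a data analysis tool for side-by-side comparison.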

2. Content Generation for Reports and Summaries

LLMs with structured output capabilities can generate content that fits into specific templates, such as business reports, summaries, or even news articles. This ensures consistency and clarity in the generated content, making it more useful for professionals who rely on structured documents for decision-making and communication.
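One simple way to approach template-based generation, sketched here with Python's standard `string.Template`, is to have the model return named fields and fill a fixed template with them. The template layout, the field names, and the `render_report` helper are hypothetical.

```python
from string import Template

# A fixed report layout; the model only supplies the field values.
REPORT_TEMPLATE = Template(
    "Title: $title\n"
    "Period: $period\n"
    "Summary: $summary\n"
)


def render_report(fields: dict) -> str:
    """Fill the template; a missing field raises KeyError instead of leaving a gap."""
    return REPORT_TEMPLATE.substitute(fields)


# Hypothetical field values, as they might be parsed from a model reply.
fields = {
    "title": "Quarterly Sales",
    "period": "Q1",
    "summary": "Revenue grew in two of three regions.",
}
print(render_report(fields))
```

Keeping the layout outside the model means every report has the same sections in the same order, whatever the model writes inside them.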

3. Enhanced Customer Support Systems

In customer support, structured outputs allow LLMs to respond to inquiries in a more organized manner. For instance, if a user asks about their account status, the LLM can provide a structured response that includes their account details, recent transactions, and any pending actions, all in a clear, easy-to-read format. This not only improves the efficiency of the support system but also enhances user satisfaction by providing quick and relevant answers.

4. Automated Coding and Development

For developers, LLMs with structured outputs can assist in generating code snippets, debugging information, or even entire scripts in a structured format. This is particularly valuable in automated coding tools where the consistency and accuracy of the output are critical for successful deployment.
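As a rough illustration, a tool might ask the model to return a snippet wrapped in a small JSON envelope and check that the code at least parses before using it. The envelope keys (`language`, `code`) and the `extract_python_snippet` helper are assumptions made for this sketch; `ast.parse` only checks syntax, not whether the code is correct.

```python
import ast
import json


def extract_python_snippet(raw: str) -> str:
    """Return the code from a JSON envelope after a syntax check."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if data.get("language") != "python":
        raise ValueError("expected a snippet labelled as Python")
    code = data.get("code")
    if not isinstance(code, str):
        raise ValueError("code must be a string")
    ast.parse(code)  # raises SyntaxError if the snippet does not parse
    return code


# Hypothetical model reply, built here with json.dumps so the escaping is right.
reply = json.dumps({"language": "python", "code": "def add(a, b):\n    return a + b\n"})
print(extract_python_snippet(reply))
```

A snippet that passes this check still needs tests and review before deployment.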

Optimizing LLMs for Structured Outputs

To maximize the benefits of structured outputs in LLMs, several optimization techniques can be employed:

- Fine-Tuning on Specific Data: Fine-tuning LLMs on domain-specific datasets can help the models learn the nuances of the required output format. This involves training the LLM on examples of the desired structured output, which improves its ability to generate similar structures in future tasks.

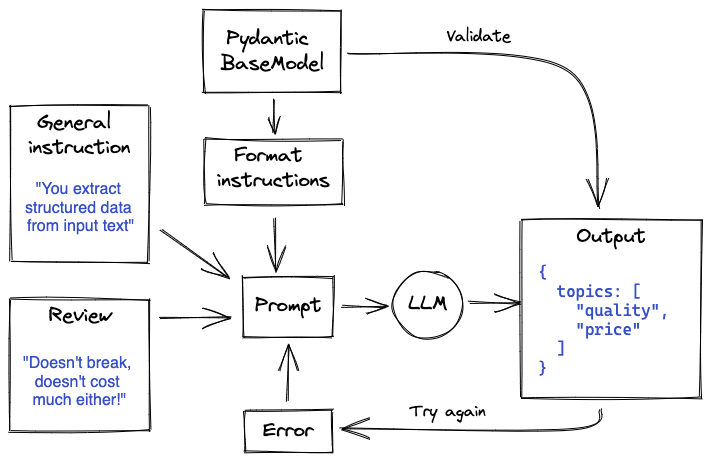

- Prompt Engineering: Crafting precise prompts is another way to guide LLMs towards producing structured outputs. By providing clear instructions and examples within the prompt, users can influence the model’s response to match the desired structure.

- Use of Output Constraints: Implementing output constraints, such as rules or templates, can further refine the structured outputs. This approach helps ensure that the LLM adheres to the required format, reducing errors and increasing reliability.
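The prompt engineering and output constraint techniques can be combined in a small loop: state the format in the prompt, check the reply against simple rules, and ask again if the check fails. The sketch below is one possible arrangement, not a definitive implementation. The schema, the helper names, and the `call_model` parameter are hypothetical, and the constraints are enforced by validating after generation rather than by restricting what the model can emit.

```python
import json
from typing import Callable

# Expected fields and their Python types; the schema is illustrative.
REQUIRED_FIELDS = {"name": str, "email": str, "age": int}


def build_prompt(text: str) -> str:
    """Build a prompt with explicit format instructions and one example reply."""
    example = {"name": "Jane Doe", "email": "jane@example.com", "age": 34}
    return (
        "Extract the person described in the text below.\n"
        "Reply with a single JSON object and nothing else.\n"
        "Required keys: name (string), email (string), age (integer).\n"
        f"Example reply: {json.dumps(example)}\n\n"
        f"Text: {text}"
    )


def check_constraints(raw: str) -> dict:
    """Apply the output rules; raise ValueError if the reply breaks any of them."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        # type() rather than isinstance() so that true/false is not accepted as an integer.
        if type(data[field]) is not expected_type:
            raise ValueError(f"field {field} must be of type {expected_type.__name__}")
    return data


def generate_structured(
    text: str, call_model: Callable[[str], str], max_attempts: int = 3
) -> dict:
    """Call the model until a reply passes the checks or attempts run out."""
    prompt = build_prompt(text)
    last_error = None
    for _ in range(max_attempts):
        try:
            return check_constraints(call_model(prompt))
        except ValueError as error:
            last_error = error
            # Feed the rejection reason back to the model on the next attempt.
            prompt = (
                build_prompt(text)
                + f"\n\nYour previous reply was rejected ({error}). Reply with valid JSON only."
            )
    raise ValueError(f"no valid reply after {max_attempts} attempts") from last_error


# Stand-in for a real model: the first reply is invalid, the second is valid.
canned_replies = iter([
    "Sure! Here is the data you asked for.",
    '{"name": "Jane Doe", "email": "jane@example.com", "age": 34}',
])
result = generate_structured(
    "Jane Doe, 34, jane@example.com", lambda prompt: next(canned_replies)
)
print(result)
```

In a real application, `call_model` would wrap the API of the model being used, and the same validated examples could later serve as fine-tuning data for the desired format.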

Conclusion

Structured outputs in LLMs represent a significant advancement in the field of artificial intelligence, making these models more versatile and effective for a wide range of applications. By producing organized and task-specific information, LLMs can enhance precision, improve user experiences, and integrate more seamlessly with other systems. As these technologies continue to evolve, the ability to generate structured outputs will likely become a standard feature, driving further innovation and efficiency across various industries.

Understanding and leveraging structured outputs in LLMs is essential for anyone looking to harness the full potential of large language models in their workflows. Whether for data extraction, content generation, customer support, or software development, structured outputs provide a clear path to more efficient and reliable AI-driven solutions.
