Introduction

In the evolving world of artificial intelligence (AI), strong performance often depends on how the learning algorithms behind a model are configured. One crucial part of that configuration is hyperparameter tuning. This article explores how hyperparameter tuning affects AI models: how it improves accuracy, generalization, and training efficiency, and what challenges it brings.

Understanding Hyperparameters

Before delving into their impact, it’s essential to grasp what hyperparameters are. Model parameters, such as the weights of a neural network, are learned from data during training. Hyperparameters, by contrast, are set before training begins and control how training proceeds: for example, the learning rate, the number of layers in a neural network, or the batch size.
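A minimal sketch of the distinction, assuming a toy linear model trained with plain gradient descent: `w` and `b` are parameters learned from the data, while `LEARNING_RATE` and `EPOCHS` are hyperparameters fixed beforehand. The function and variable names are illustrative and do not come from any library.

```python
# Hyperparameters: chosen before training starts and never updated by it.
LEARNING_RATE = 0.05
EPOCHS = 200


def train_linear_model(xs, ys, learning_rate, epochs):
    """Fit y ≈ w * x + b by batch gradient descent on mean squared error.

    w and b are model parameters: they start at zero and are learned from data.
    learning_rate and epochs are hyperparameters: they only control how learning runs.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = train_linear_model(xs, ys, LEARNING_RATE, EPOCHS)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Changing `LEARNING_RATE` or `EPOCHS` changes how training runs, but the final values of `w` and `b` are still determined by the data.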

The Role of Hyperparameter Tuning

Hyperparameter tuning is the search for the combination of hyperparameter values that gives the best model performance, usually measured on a validation set held out from training. Its effect can be large: well-chosen hyperparameters can substantially improve a model’s accuracy, its ability to generalize, and its overall effectiveness.
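To make the search concrete, here is a minimal sketch that tunes a single hyperparameter, the number of neighbours `k` in a hand-written 1-D k-nearest-neighbours regressor, on synthetic data. Each candidate is fitted on the training split and scored on a held-out validation split; the data, the candidate values, and the helper names are assumptions for illustration.

```python
import random


def knn_predict(train, x, k):
    """Predict y at x as the mean y of the k nearest training points (1-D k-NN regression)."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def validation_mse(train, val, k):
    """Mean squared error on the validation split for a given k."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in val) / len(val)


rng = random.Random(0)
# Synthetic data: y = x^2 plus Gaussian noise, with x drawn uniformly from [-1, 1].
data = [(x, x * x + rng.gauss(0, 0.1)) for x in (rng.uniform(-1, 1) for _ in range(200))]
train, val = data[:150], data[150:]  # hold out 50 points for validation

candidates = [1, 3, 5, 9, 15, 31]
errors = {k: validation_mse(train, val, k) for k in candidates}
best_k = min(errors, key=errors.get)  # lowest validation error wins
print("validation MSE by k:", {k: round(e, 4) for k, e in errors.items()})
print("selected k:", best_k)
```

Small `k` tends to follow the noise (overfitting) and large `k` tends to smooth away the curve (underfitting); the validation split is what arbitrates between them.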

Enhanced Model Performance

The most direct benefit of hyperparameter tuning is better model performance. By adjusting hyperparameters, practitioners can reduce overfitting or underfitting. For instance, in a neural network, a learning rate that is too high can make training unstable or cause it to settle on a poor solution, while one that is too low makes the model take too long to learn.
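The learning-rate trade-off is visible even on the simplest possible objective. This sketch is a toy example rather than a neural network: it runs gradient descent on f(w) = w² for three learning rates chosen for illustration.

```python
def gradient_descent_on_quadratic(learning_rate, steps=20, w0=1.0):
    """Minimize f(w) = w**2 (minimum at w = 0) with plain gradient descent."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * 2 * w  # the gradient of w**2 is 2w
    return w


for lr in (0.01, 0.3, 1.1):
    print(f"learning rate {lr}: w after 20 steps = {gradient_descent_on_quadratic(lr):.4g}")
```

Each step multiplies `w` by (1 - 2 * learning_rate), so 0.01 barely moves in 20 steps, 0.3 gets very close to the minimum, and 1.1 overshoots further on every step and diverges.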

Increased Generalization

Hyperparameter tuning also plays a crucial role in improving a model’s ability to generalize to new, unseen data. For example, choosing an appropriate regularization strength, such as the weight of an L2 penalty on the model’s weights, discourages the model from memorizing the training data and so improves its performance on real-world inputs. This matters because a model is only useful if it performs well beyond the examples it was trained on.
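As a minimal sketch of how a regularization hyperparameter acts, assume a one-feature linear model y ≈ w · x fitted by L2-regularized least squares (ridge regression). The penalty strength `lam` is the hyperparameter; the data points below are made up for illustration.

```python
def ridge_fit_1d(xs, ys, lam):
    """Closed-form L2-regularized least squares for y ≈ w * x (no intercept).

    Minimizes sum((w*x - y)**2) + lam * w**2. Setting the derivative to zero gives
    w = sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.2, 3.9, 6.1, 8.0]  # roughly y = 2x with noise (made-up values)
weights = {lam: ridge_fit_1d(xs, ys, lam) for lam in (0.0, 10.0, 100.0)}
for lam, w in weights.items():
    print(f"lam={lam:>5}: w={w:.3f}")
```

Larger values of `lam` pull `w` toward zero, limiting how closely the model can follow noise in the training data; too large a value underfits, which is why `lam` is tuned rather than fixed.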

Optimizing Training Time

Efficient training is another benefit of hyperparameter tuning. Well-chosen settings, such as the learning rate, can make training converge in fewer steps. This is particularly important in large-scale AI projects, where computational resources and time are often limited: by reducing the number of epochs required for training, tuning saves both.
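To illustrate how a better setting cuts the number of training steps, this sketch counts gradient-descent iterations (treated here as epochs) needed to minimize the toy objective f(w) = (w - 3)² to a fixed tolerance. The objective, the tolerance, and the learning rates are assumptions chosen for illustration.

```python
def epochs_to_converge(learning_rate, target=3.0, tol=1e-6, max_epochs=10_000):
    """Count gradient-descent steps on f(w) = (w - target)**2 until |w - target| < tol.

    Returns None if training diverges or the tolerance is not reached within max_epochs.
    """
    w = 0.0
    for epoch in range(1, max_epochs + 1):
        w -= learning_rate * 2 * (w - target)  # gradient of (w - target)**2
        if abs(w - target) < tol:
            return epoch
        if abs(w - target) > 1e12:  # error is growing without bound: diverging
            return None
    return None


for lr in (0.01, 0.1, 0.4, 1.1):
    print(f"learning rate {lr}: epochs needed = {epochs_to_converge(lr)}")
```

The same stopping criterion is reached in far fewer epochs with a well-chosen learning rate, while a rate that is too large never reaches it.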

Automating Hyperparameter Tuning

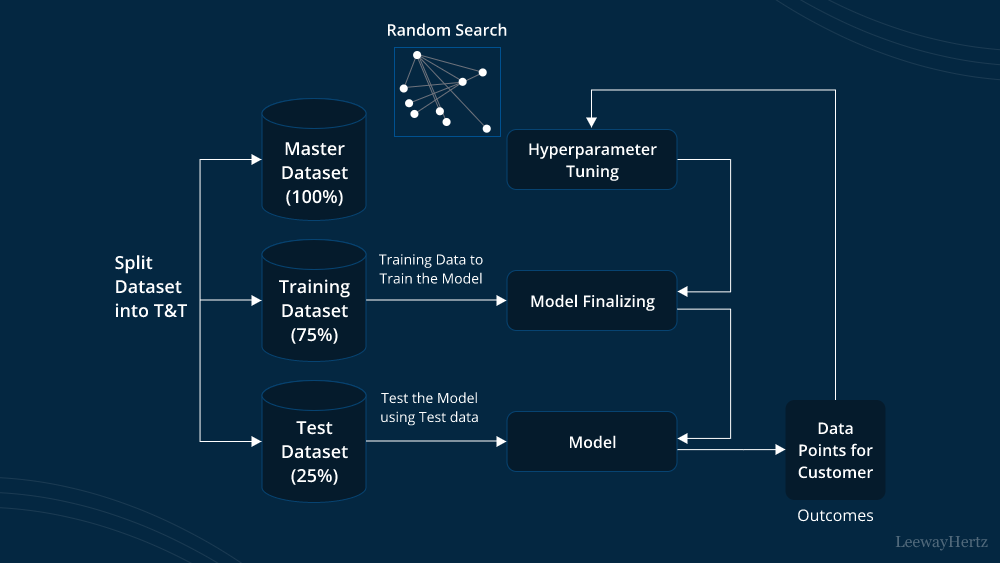

Because tuning by hand is slow and tedious, various automated methods have been developed. Grid search evaluates every combination in a predefined set of candidate values; random search samples combinations at random from specified ranges; and more advanced methods such as Bayesian optimization use the results of earlier trials to decide which settings to try next. These methods explore the search space more systematically than manual trial and error, which both speeds up the process and helps in finding better-performing models.
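Grid search and random search are simple enough to sketch directly. In the example below, `validation_score` is a hypothetical stand-in for training a model and returning its validation score, and the two hyperparameters and their ranges are assumed for illustration. Bayesian optimization is not shown; it is normally taken from a dedicated library rather than written by hand.

```python
import itertools
import math
import random


def validation_score(learning_rate, num_layers):
    """Hypothetical stand-in for 'train a model and return its validation score'.

    This toy function peaks (score 0) at learning_rate = 0.01 and num_layers = 4.
    """
    return -((math.log10(learning_rate) + 2) ** 2) - 0.1 * (num_layers - 4) ** 2


def grid_search(space, objective):
    """Evaluate every combination of the candidate values in `space`."""
    names = list(space)
    best_config, best_score = None, float("-inf")
    for values in itertools.product(*(space[name] for name in names)):
        config = dict(zip(names, values))
        score = objective(**config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def random_search(sampler, objective, n_trials, seed=0):
    """Evaluate n_trials configurations drawn by `sampler`."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        config = sampler(rng)
        score = objective(**config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def sample_config(rng):
    # Learning rate drawn log-uniformly from [1e-4, 1e-1]; layers uniformly from 1..8.
    return {"learning_rate": 10 ** rng.uniform(-4, -1), "num_layers": rng.randint(1, 8)}


grid = {"learning_rate": [0.001, 0.01, 0.1], "num_layers": [2, 4, 6]}
grid_best, grid_score = grid_search(grid, validation_score)
rs_best, rs_score = random_search(sample_config, validation_score, n_trials=20)
print("grid search:  ", grid_best, round(grid_score, 4))
print("random search:", rs_best, round(rs_score, 4))
```

Sampling the learning rate log-uniformly is a common choice because useful values often span several orders of magnitude; a fixed grid would spend most of its budget on the values it happens to list.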

Challenges in Hyperparameter Tuning

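Despite its benefits, hyperparameter tuning comes with its own set of challenges. The search space can be vast: the number of combinations multiplies with each hyperparameter added, so exploring all of them quickly becomes computationally expensive and time-consuming. Moreover, because many configurations are compared on the same validation set, the winning configuration may score well partly by chance. This overfitting to the validation set is why careful monitoring is needed, along with a separate test set, untouched during tuning, for a trustworthy final estimate.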
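Both challenges can be illustrated with a few lines of Python. The first part computes the size of a full grid; the second is a small simulation, under the assumption that all 100 candidate configurations are equally good (true accuracy 0.70), showing that the best validation score still makes the chosen configuration look better than it really is.

```python
import math
import random

# Size of a full grid: the product of the number of candidate values per hyperparameter.
values_per_hyperparameter = [10, 10, 10, 10, 10]  # five hyperparameters, ten values each
print("configurations in full grid:", math.prod(values_per_hyperparameter))  # 100000


def noisy_accuracy(true_accuracy, n_examples, rng):
    """Accuracy measured on a finite sample: each example is correct with prob. true_accuracy."""
    return sum(rng.random() < true_accuracy for _ in range(n_examples)) / n_examples


rng = random.Random(42)
TRUE_ACCURACY = 0.70  # assumption: every candidate configuration is equally good
N_CONFIGS, N_VAL, N_TEST = 100, 200, 200

# Score every configuration on the same-sized validation sample and keep the best.
val_scores = [noisy_accuracy(TRUE_ACCURACY, N_VAL, rng) for _ in range(N_CONFIGS)]
best = max(range(N_CONFIGS), key=val_scores.__getitem__)
# Re-measure the chosen configuration on fresh test data it was not selected on.
test_score = noisy_accuracy(TRUE_ACCURACY, N_TEST, rng)
print(f"best validation accuracy: {val_scores[best]:.3f}")
print(f"test accuracy of chosen config: {test_score:.3f} (true accuracy {TRUE_ACCURACY})")
```

The selected configuration's validation accuracy is inflated by the act of selection, while its accuracy on fresh test data typically lands near the true value.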

Best Practices for Effective Tuning

To get the most out of hyperparameter tuning, practitioners should follow a few best practices:

- Start with a Baseline: Begin by establishing a baseline model with default hyperparameters. This provides a reference point for evaluating improvements.

- Use Cross-Validation: Implement cross-validation techniques to ensure that the tuning process is robust and not overfitting to a particular subset of data.

- Leverage Automated Tools: Utilize automated hyperparameter tuning tools to efficiently explore the search space and find optimal settings.

- Monitor Performance: Continuously monitor model performance metrics during the tuning process to ensure that improvements are genuine and not just artifacts of the validation process.

- Iterate and Refine: Hyperparameter tuning is an iterative process. Refine the search based on initial results to home in on the best hyperparameters.
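Several of these practices fit into one short loop. This sketch, again using a hand-written 1-D k-nearest-neighbours regressor on synthetic data (both assumptions for illustration), records a baseline with an arbitrary default `k`, scores candidates with 5-fold cross-validation, and then refines the search around the best coarse value.

```python
import random


def knn_predict(train, x, k):
    """Mean y of the k training points closest to x (1-D k-NN regression)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def k_fold_mse(data, k_neighbors, n_folds=5):
    """Average validation MSE of 1-D k-NN regression across n_folds folds."""
    fold_errors = []
    for i in range(n_folds):
        val = data[i::n_folds]  # points whose index j satisfies j % n_folds == i
        train = [p for j, p in enumerate(data) if j % n_folds != i]
        mse = sum((knn_predict(train, x, k_neighbors) - y) ** 2 for x, y in val) / len(val)
        fold_errors.append(mse)
    return sum(fold_errors) / n_folds


rng = random.Random(1)
data = [(x, x * x + rng.gauss(0, 0.1)) for x in (rng.uniform(-1, 1) for _ in range(200))]
rng.shuffle(data)  # shuffle before splitting into folds

# 1. Baseline with an arbitrary default setting.
baseline_k = 5
baseline_score = k_fold_mse(data, baseline_k)

# 2. Coarse search, scored by cross-validation rather than a single split.
coarse = [1, 3, 10, 30, 100]
coarse_scores = {k: k_fold_mse(data, k) for k in coarse}
best_coarse = min(coarse_scores, key=coarse_scores.get)

# 3. Refine: try values close to the best coarse value.
fine = sorted({max(1, best_coarse + d) for d in (-2, -1, 0, 1, 2)})
fine_scores = {k: k_fold_mse(data, k) for k in fine}
best_k = min(fine_scores, key=fine_scores.get)

print(f"baseline k={baseline_k}: CV MSE {baseline_score:.4f}")
print(f"tuned k={best_k}: CV MSE {fine_scores[best_k]:.4f}")
```

Comparing the tuned cross-validation score against the baseline shows whether the search actually helped; for a final, unbiased estimate the tuned model should still be checked on data that was not used in any fold.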

Conclusion

Hyperparameter tuning has a significant influence on model accuracy, generalization, and efficiency. By carefully selecting and optimizing hyperparameters, AI practitioners can get considerably more out of their models and obtain more accurate, reliable results. While the process can be complex and resource-intensive, these gains in model performance and training efficiency make it a crucial step in developing advanced AI systems. As AI continues to evolve, mastering hyperparameter tuning will remain a key factor in achieving cutting-edge performance and innovation.
